Why Upgrade Your .NET Core API from 2.x to 3.1?

With the release of .NET Core 2.1, the .NET Core ecosystem finally had a decently stable and feature-complete release offering LTS support. .NET Standard 2.0 brought with it thousands of new APIs that provided most of the parity the day-to-day enterprise needed, specifically for building web applications and APIs. Prior to .NET Core 2.x it was a pretty bumpy ride going from ASP.NET 5 to .NET Core 1.x, the XPROJ extensions, etc. Now it feels like the ecosystem has landed somewhere safe and sound. You likely played with .NET Core 2.0 but didn’t upgrade because it wasn’t LTS, and the same goes for the recent .NET Core 3.0 release. It was tempting to move right away, but I’m sure your Microsoft radar-sense was tingling to tell you that you know better than to go down that route.

But now .NET Core and ASP.NET Core 3.1 LTS is finally here and ready to roll. However, it’s probably not news to you that, much like .NET Core 2.0 focused on Web API parity, .NET Core 3.x is focused on desktop development. So why should you be excited, or even spend the time, to migrate from .NET Core 2.1 to .NET Core 3.1 (after all, we stuck to LTS versions, so I know I still have 3 years… right)?

Migrate NOW….errr Maybe?

Your first inclination is right. As the developer of a .NET Core 2.1 web application, you have no obvious reason to upgrade immediately. Take a breath of fresh air and plan the right time to upgrade that fits with your schedule. If you stuck to .NET Core 2.1 because it was LTS, the biggest upgrades you’ll get from a feature perspective are the ones that arrived in .NET Core 2.2 (non-LTS). The good news is you have some time to plan it out. If you are on .NET Core 2.2 already, then the urgency is a little greater because official support has already run out.

If the concept of LTS is newer to you, then let this be a lesson: sticking to LTS versions of .NET gives you a lot more flexibility to manage the onslaught of incoming business features and requests, because it reduces the urgency to get off unsupported versions of the software.

Upgrading can be pretty simple depending on the type of project you have. If you have an internal project that is not shared, it will be very straightforward. If you are supporting a library across multiple standard versions, then it gets interesting. Some resources I suggest:

- Official Documentation for 2.2 -> 3.0

- Breaking Changes (2.1 -> 2.2, 2.2 -> 3.0, 3.0 -> 3.1)

- Definitive Migration Guide from Andrew Lock (this is a MUST READ!)

All that being said, let’s talk about the high-level features (and pitfalls) that you’ll unlock with this upgrade from the perspective of building a web application.

.NETStandard 2.1 Support

.NET Core 3.0 brought with it support for .NETStandard 2.1. The general guidance for library development is to target not the latest .NETStandard version, but rather the lowest version that contains the APIs you need. .NETStandard 2.1 is no different. There may be no reason for you to update anything at all… unless you are referencing ASP.NET Core libraries from a particular .NET Core version, in which case this can really hurt. See Andrew Lock’s guide above.

Additionally, at the enterprise level, we have been using .NETStandard 2.0 support to build out HTTP Clients and other utility libraries for use in .NET Core and existing legacy .NET Framework Web Applications. You should be aware that by migrating to .NETStandard 2.1, you effectively drop all support for .NET Framework.
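If you want the newer APIs without cutting off .NET Framework consumers, one option is to multi-target. A minimal sketch, assuming a plain utility library with no ASP.NET Core dependencies:

```xml
<Project Sdk="Microsoft.NET.Sdk">
  <PropertyGroup>
    <!-- netstandard2.0 keeps .NET Framework and .NET Core 2.x consumers working;
         netstandard2.1 is only picked up by consumers that support it, such as .NET Core 3.x -->
    <TargetFrameworks>netstandard2.0;netstandard2.1</TargetFrameworks>
  </PropertyGroup>
</Project>
```

Any code that relies on 2.1-only APIs then has to sit behind a conditional such as #if NETSTANDARD2_1, so whether this is worth the extra build complexity depends on how much you actually gain from the newer surface.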

FrameworkReferences

Finally, we have “FrameworkReferences”. The idea of a PackageReference to a NuGet metapackage was a bust. We suffered through several iterations of that simply not working, making this a refreshing change for once… but buyer beware!

When building an ASP.NET Core project, my CSPROJ file in 2.x would look like this:

<Project Sdk="Microsoft.NET.Sdk.Web">
  <PropertyGroup>
    <TargetFramework>netcoreapp2.1</TargetFramework>
  </PropertyGroup>
  <ItemGroup>
    <PackageReference Include="Microsoft.AspNetCore.App" />
  </ItemGroup>
</Project>

The versioning of that PackageReference always made me shudder, but it felt like a necessary evil sometimes. Moving forward, ASP.NET Core CSPROJ files simply drop the PackageReference, since the entire framework usage is implied by Sdk="Microsoft.NET.Sdk.Web".

<Project Sdk="Microsoft.NET.Sdk.Web">
  <PropertyGroup>
    <TargetFramework>netcoreapp3.1</TargetFramework>
  </PropertyGroup>
</Project>

Nice and simple… I like it 🙂

The major change comes when I don’t reference the “Web” SDK specifically. For example, if you are writing a library that previously referenced Microsoft.* NuGet packages to hook into the middleware pipeline, you’ll now remove those package references (you won’t find 3.x versions of most of them, because they are baked into the shared framework that installs with the SDK). Then you’ll need to pull in a framework reference instead:

<Project Sdk="Microsoft.NET.Sdk">
  <PropertyGroup>
    <TargetFramework>netcoreapp3.1</TargetFramework>
  </PropertyGroup>
  <ItemGroup>
    <FrameworkReference Include="Microsoft.AspNetCore.App" />
  </ItemGroup>
</Project>

Notice what I just slipped in as well? The target framework must be updated to “netcoreapp3.1” (netcoreapp3.0 at a minimum) in order to make use of the FrameworkReference. Now that has some interesting ramifications! Mainly, this is no longer a .NETStandard library, meaning it can only be consumed by a .NET Core app. Ruh-roh… might that have a dramatic effect on your various consumer platforms?

While it seems very concerning, this change actually helps to drastically clarify and enforce the usage of certain libraries. Even though I had a .NETStandard library, it was using .NETStandard packages containing contracts and interfaces that SHOULD only ever execute on a particular version of ASP.NET Core. I may have felt that I had portability before, but I never really did. In libraries that I continue to maintain as .NETStandard for use in .NET Framework, this change helps me clearly identify leaking dependencies and remove them, so the library becomes truly portable.
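For a shared library that genuinely has to serve both worlds, one possible arrangement is to multi-target and choose the reference style per target. This is only a sketch: the Microsoft.AspNetCore.Http.Abstractions package and its 2.1.1 version are stand-ins for whatever 2.x packages your library actually used.

```xml
<Project Sdk="Microsoft.NET.Sdk">
  <PropertyGroup>
    <TargetFrameworks>netstandard2.0;netcoreapp3.1</TargetFrameworks>
  </PropertyGroup>

  <!-- ASP.NET Core 3.x consumers: everything comes from the shared framework -->
  <ItemGroup Condition="'$(TargetFramework)' == 'netcoreapp3.1'">
    <FrameworkReference Include="Microsoft.AspNetCore.App" />
  </ItemGroup>

  <!-- ASP.NET Core 2.x consumers: keep the individual 2.x packages (illustrative package and version) -->
  <ItemGroup Condition="'$(TargetFramework)' == 'netstandard2.0'">
    <PackageReference Include="Microsoft.AspNetCore.Http.Abstractions" Version="2.1.1" />
  </ItemGroup>
</Project>
```

The trade-off is that the library now compiles twice against two different API surfaces, so any type that changed between 2.x and 3.x needs conditional code.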

Additionally, by default, I would typically start with a .NETStandard class library as a business layer in most of my web apps. There is no reason that layer cannot be netcoreapp3.1, and in fact it probably should be. The implication is that once a library enforces netcoreapp3.1, every project in the app that references it must be updated to netcoreapp3.1 as well (i.e. a netstandard2.0 project cannot reference a netcoreapp3.1 project; restore will fail).

System.Text.Json

For the first time in a while, Newtonsoft.Json is no longer the default JSON library in ASP.NET Core. For so long, Newtonsoft has been the go-to library for all your JSON needs, no questions asked. For a long time, I appreciated not having to think about it and not having to debate which library to pick. Well, that is no longer the case.

Newtonsoft.Json was used extensively, deep in the bowels of ASP.NET, and System.Text.Json was born in order to break the framework’s dependency on it (and I hear it was worked on in collaboration with James Newton-King). It is supposedly more performant, lightweight and optimized for ASP.NET Core. Migration to it seems relatively easy, with many of the attribute annotations having close equivalents: [JsonIgnore] only needs a change of namespace, while [JsonProperty] becomes [JsonPropertyName].

It sounds great. Even so, the enormous usage and scattered configurations that are Newtonsoft-specific across the enterprise really make this a tough sell. You may decide to just stick with Newtonsoft.Json, which is entirely valid and easily added back (I know I did for a bunch of projects across our organization).
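For reference, here is a minimal sketch of opting back into Newtonsoft.Json in a 3.1 web project. The package is the one Microsoft publishes for this purpose; the 3.1.0 version number is only an assumption for illustration, so use whichever 3.1.x patch is current for you.

```xml
<Project Sdk="Microsoft.NET.Sdk.Web">
  <PropertyGroup>
    <TargetFramework>netcoreapp3.1</TargetFramework>
  </PropertyGroup>
  <ItemGroup>
    <!-- Brings back the Newtonsoft.Json-based MVC formatters; the version shown is an assumption -->
    <PackageReference Include="Microsoft.AspNetCore.Mvc.NewtonsoftJson" Version="3.1.0" />
  </ItemGroup>
</Project>
```

The package does nothing until it is wired up, typically by chaining AddNewtonsoftJson() onto AddControllers() (or AddMvc()) in Startup.ConfigureServices.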

Docker Optimizations

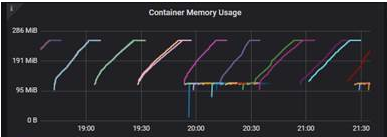

If there is any compelling reason to upgrade to .NET Core 3.x sooner rather than later this might be the one. If you’re running a .NET Core 2.x app in a Linux container with low memory, you no doubt ran into cyclical crashing loops as a result of improper heap allocation, because the memory limits were not properly detected.

The following illustrates the behavior we saw in our organization on a number of services suffering from this:

A decent workaround was to disable server garbage collection (or increase the memory):

<Project Sdk="Microsoft.NET.Sdk.Web">
  <PropertyGroup>
    <TargetFramework>netcoreapp2.1</TargetFramework>
    <ServerGarbageCollection>false</ServerGarbageCollection>
  </PropertyGroup>
</Project>

In general, getting this small but deadly bug out of the way, along with the additional enhancements for running in a container, is a very welcome change.

Faster & Smaller SDK

As a result of switching to “FrameworkReferences” (both explicit and implicit with the SDK type), many of the packages that used to be restored via NuGet are now readily available because you already have the SDK installed.

This is a small benefit to the local developer, who really only downloaded those packages a few times before they were cached. However, you might find this is a small but quick win on your build server, depending on whether you cache NuGet packages between builds (building inside a container makes that a bit more challenging).

Additionally, when publishing the application, the output will be somewhat smaller, since those DLLs can be left out when you are not building a self-contained app (oh, and you can now natively publish a single-file executable too, either self-contained or framework-dependent).
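As a rough sketch of the single-file option, these publish settings can live in the project file. The linux-x64 runtime identifier is an assumption for illustration; substitute whatever platform you deploy to.

```xml
<Project Sdk="Microsoft.NET.Sdk.Web">
  <PropertyGroup>
    <TargetFramework>netcoreapp3.1</TargetFramework>
    <!-- Single-file publishing needs a concrete runtime; linux-x64 is an assumed target -->
    <RuntimeIdentifier>linux-x64</RuntimeIdentifier>
    <PublishSingleFile>true</PublishSingleFile>
    <!-- false = framework-dependent (smaller, needs the runtime installed on the host);
         true = self-contained (larger, carries the runtime with it) -->
    <SelfContained>false</SelfContained>
  </PropertyGroup>
</Project>
```

With these in place, a plain dotnet publish -c Release should produce the single executable. The same properties can also be passed on the command line if you would rather keep the project file untouched.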

Cross-Platform Diagnostic Tools

To be totally honest, I’m not sure I’d know how to effectively debug a cross-platform issue with .NET Core without stumbling all over the place. I generally develop and run my apps locally on a Windows machine, and once I’m getting close, I test by building and running the app in a Linux Docker container. For the most part it has always “just worked” (YAY!). There have been a few misses I’ve had to debug around API differences, such as dealing with timezones between platforms, but nothing major, and nothing where the error output didn’t make the problem obvious.

With the introduction of these great new cross-platform, CLI-based diagnostic tools (such as dotnet-counters, dotnet-trace, and dotnet-dump), I feel much more capable and in control on short notice. I haven’t had much time to explore them yet, but I plan to in the coming months. Have you tried them out?